SQL anti-patterns are an often misunderstood concept. One very basic anti-pattern is a leading wildcard character in a textual search. It can have really bad effects on a large table and yet there are always instances of it becoming a requirement. You see this all too often especially when reporting style queries are run on a transactional database. Lets say that for some reason we wanted to find all of our customers whose surnames end in “ith” like Smith for instance. We might write a query like the one below: –

[code language=”sql”]

SELECT FirstName, LastName, DOB

FROM dbo.table1

WHERE col1 LIKE LastName ‘%ith’;

[/code]

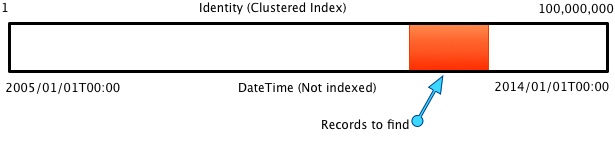

Even if we have an index on LastName the best we can do is to scan the index. An index in SQL Server, much like an index in a phone directory, is of little help to us here. If you were to try and find every surname in a phone book that ended with “ith” you’d have to read every entry in the index. Ok that’s still better than looking at every page in the phonebook. Although our query requests 3 columns which may not be in the index and therefore the lookups to the actual data may actually make the index scan and lookup slower than a scan of the entire data.

Anyway onto something quite trivial that can speed up such a query. If the term collation in this posts title jumped out at you as a potential performance improvement then you’re correct. Of course we could also use a full text index but that carries overhead and all sorts of other joys. Lets assume that either this isn’t a common query or you’re low on disk space and full text indexes are not an option just for this post. I might cover those in another post at some other point. A collation is a way of ordering something in our case the text that we are storing and the way that SQL Server can use different collations affects the query above. Let’s look at an example, it’s quite a long script so I’d suggest you copy and paste it and then read along after: –

[code language=”sql”]

SET NOCOUNT ON;

GO

IF OBJECT_ID( N’tempdb..#results’, ‘U’ ) IS NOT NULL DROP TABLE #results;

GO

CREATE TABLE #results( duration INT, run VARCHAR(30) );

— Create a test DB

USE [master];

GO

IF( SELECT DATABASEPROPERTYEX(N’TestDB’, ‘Collation’) ) IS NOT NULL DROP DATABASE TestDB;

GO

CREATE DATABASE [TestDB]

ON PRIMARY

( NAME = N’TestDB’, FILENAME = N’C:\SQLData\DataFiles\TestDB.mdf’ , SIZE = 20480KB , FILEGROWTH = 20480KB )

LOG ON

( NAME = N’TestDB_log’, FILENAME = N’C:\SQLData\LogFiles\TestDB_log.ldf’ , SIZE = 20480KB , FILEGROWTH = 20480KB )

GO

USE [TestDB];

GO

— Create and populate a relation

IF OBJECT_ID( N’dbo.LoadsOfData’, ‘U’ ) IS NOT NULL DROP TABLE dbo.LoadsOfData;

GO

CREATE TABLE dbo.LoadsOfData(

id INT IDENTITY PRIMARY KEY CLUSTERED

, filler CHAR(200) NOT NULL DEFAULT ‘a’ COLLATE SQL_LATIN1_GENERAL_CP1_CI_AS — Notice the normal collation

);

GO

WITH n( n ) AS(

SELECT 1 UNION ALL SELECT 1 UNION ALL SELECT 1 UNION ALL SELECT 1 UNION ALL SELECT 1 UNION ALL

SELECT 1 UNION ALL SELECT 1 UNION ALL SELECT 1 UNION ALL SELECT 1 UNION ALL SELECT 1

), vals( n ) AS (

SELECT ROW_NUMBER() OVER( ORDER BY n.n ) – 1

FROM n, n n1, n n2, n n3, n n4, n n5

)

INSERT INTO dbo.LoadsOfData( filler )

SELECT REPLICATE( CHAR( 48 + n % 10 ) + CHAR( 65 + n % 26 ) + CHAR( 97 + (n + 1) % 26 ) +

CHAR( 48 + (n + 3) % 10 ) + CHAR( 65 + (n + 5) % 26 ) + CHAR( 97 + (n + 7) % 26 )

, 30 )

FROM vals;

GO

— Time something that will scan

DECLARE @start DATETIME2( 7 ) = SYSDATETIME(), @a INT;

SELECT @a = COUNT(*)

FROM dbo.LoadsOfData

WHERE filler LIKE ‘%e%’;

SELECT @a = DATEDIFF( mcs, @start, SYSDATETIME() );

INSERT INTO #results( duration, run ) VALUES( @a, ‘With normal collation’ );

GO

— Change the collation

ALTER TABLE dbo.LoadsOfData ALTER COLUMN filler CHAR(200) COLLATE LATIN1_GENERAL_BIN; — Now make the column a binary collation

GO

— Try again

DECLARE @start DATETIME2( 7 ) = SYSDATETIME(), @a INT;

SELECT @a = COUNT(*)

FROM dbo.LoadsOfData

WHERE filler LIKE ‘%e%’;

SELECT @a = DATEDIFF( mcs, @start, SYSDATETIME() );

INSERT INTO #results( duration, run ) VALUES( @a, ‘With binary collation’ );

GO

— Yes the applied selects are inefficint but they take less space 🙂

SELECT duration, run

FROM #results

UNION ALL

SELECT (select duration from #results where run like ‘%normal%’) / (select duration from #results where run like ‘%binary%’), ‘Improvement multiple’;

[/code]

So lets go over what we’re doing for clarity.

- First we drop and then create a test database to use, always good to clean up

- We create a temp table to hold the results

- Create a table to use and populate some nonsensical test data

- Now we run our wildcard query and get the execution time. Note that we don’t return any results to the client so this is basically the execution as best as we can get it. We get the duration in microseconds

- Then we change the column to use a binary collation

- Now we re-run our test in the same way as before and again store the results in our temp table

- Finally we get the durations and a difference from the results table. I don’t care about the inefficiency here 🙂

As you can see, even with this small table there is a remarkable difference in duration and we see an improvement of about 15 times. This is better than I was expecting, I was expecting nearer 8 times which is more usual but the point is well made by the difference. Also in this case we didn’t have an index on the text column but because that is by far the majority of the data anyway it wouldn’t make that much difference.