I’m sure you can all remember one of those interview questions about divide and conquer. Well it turned out that I tried to find a documented solution to just such a problem in T-SQL the other day and couldn’t. Long story short I decided to document it for myself and anyone else who finds it useful. The script is at the end if you don’t want to read the background and why it was useful.

A little background first. I was working for a company that had some rather large tables (Hundreds of GBs and hundreds of millions of rows). To make matters worse the tables were often heavily accessed. It was a data warehouse being used more like an OLTP environment. This presented a number of problems. One such problem was that if you didn’t have the right indexes on a table then you’d have difficulties creating it. Given the size of their already monolithic database no-one wanted to see another index added for a few bad queries.

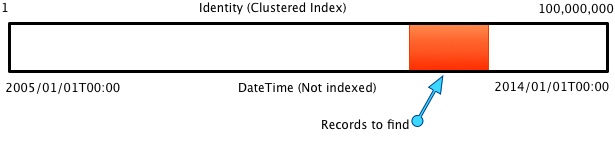

One of these queries included a regular request to look up a range of dates in an audit table. Unfortunately someone had made the decision not to put an index on the datetime field. Fortunately the table was clustered on an identity. This made for a nice situation which wasn’t too hard to solve. The table, as previously mentioned, was an audit table which meant that both the identity and the datetime of modification were written to with incremental values, they were surrogates of one another.

The solution was to go to Google/Bing and look up “T-SQL binary search”. Unfortunately when I did so that wasn’t much help. I didn’t need a description of the solution, I wanted a script. One I could easily modify would be pretty cool, one that worked out of the box rather better. A little visualization might be helpful.

So at present we can only scan the cluster, well without the solution I’m about to describe anyway. This is obviously a very costly operation. What’s even worse is that an index on datetime still wouldn’t itself lead to an ideal plan as I’ll show later on. In any case we don’t have a helpful index so let’s get creative.

First a few assumptions, some of these are for simplicity and some will become clear later: –

- There are no gaps in the identity column (Makes the script easier, please note SQL Server makes no such guarantee)

- As stated this works because both identity and datetime are ever increasing

- The cluster is a depth 4 index (It was in my case)

- An index on the datetime column would be depth 4

- Assume we’re trying to read 100,000 records

- Assume we have 50 rows per data page

- The range of required records is contiguous, ie between two dates (as was the case for me)

A pseudo code solution is provided below: –

- Let T be our target datetime value

- Get the sum of min and max values for our range

- Initially this is min and max from table, thereafter we move the incorrect value

- Divide the sum by 2 to get the halfway point

- Read through the cluster and get the datetime (Value X)

- Get the datetime of the next record along (Value Y)

- If X < T and Y >= T we have a match, break out of the loop

- If X > T make our new max value equal to X

- If Y < T make our new min value equal to Y

- Go to 2 with min or max value altered

We can follow the above twice, once for the starting value and once for the ending value. This will give us a range to scan in the cluster. Let’s have a think about the I/O requirements here and compare them to other options.

Clustered index scan = 100M records at 50 records per page = 2M reads. That’s not good.

Let’s examine a hypothetical index for the datetime field: –

- If we had an index on datetime (We don’t remember) and it was a depth 4 index with 404 records per index page then we would get the below I/O: –

- 4 reads to find the first record

- 223 pages in a partial index scan

- 400,000 reads through the cluster (Most would be in memory) as we loop join from the index into the cluster

Total = 400,227 reads.

Ok that’s a lot better but is it the best?

Let’s look at the binary search and scan.

- We have to do 8 reads through the cluster to get the min and max values for the identity column of the table.

- We then need to calculate the mid-point (No reads).

- From there we have to pull out 2 successive values at 8 reads for both values. This is done repeatedly until the record is found. This is deterministic, with an integer it cannot be more than 32 times, with a bigint not more than 64. In our case 27 trips will suffice so that’s 216 reads

- We then need to repeat this for the upper value (The min and max could be stored but it’s not worth it so my script doesn’t).

Total so far (8 + 216) * 2 = 448 - Now we scan the cluster, 4 reads to get to the correct record and 2,000 pages in the cluster need to be scanned.

Total = 2,452 WOW! (815 times better than the clustered index scan and 163 times better than the datetime index). To top that off we didn’t pollute the buffer pool anywhere near as much.

We just did a lot better than the index we might have wanted to create. Not only that but we also didn’t need the overhead of storing that other index on datetime nor the overhead of keeping it up to date. This was just a simple example of how a potentially poor schema decision lead to a much better solution through a little engineering and creativity. All those interview questions really do make sense and have their uses 🙂

For anyone who thought about it, yes you can solve this with even fewer reads, if we had a datetime index and looked up the min and max values we could get the cluster keys from that. In that case we would have the below situation: –

- Non clustered seek, 4 reads, to get the minimum ID value.

- Non clustered seek, 4 reads, to get the maximum ID value.

- Clustered seek, 4 reads, to get to the first page to start our scan.

- Scan along the cluster, 2,000 reads, to get all the data.

The total here is 2,012 pages. However we have the extra space for the index, the logging overhead and the writes to the index to keep it up to date. Yes it’s more useful, but in my case not worth it.

Summary

| Option | Page reads | % of table | Index overhead |

|---|---|---|---|

| Table scan | 2,000,000 | 100% | No |

| Index seek and lookup | 400,227 | 20.0% | Yes |

| Binary search and partial scan | 2,452 | 0.123% | No |

| Manual seek and partial scan | 2,012 | 0.1% | Yes |

Great post, I had a very similar problem – and came up with the exact same approach. Then a bit of searching confirmed I’m not alone 😉

Often with very large tables that may not be indexed according to how you want to search can be quite problematic, but with an incrementing identity it can be overcome!

Thank you, I was surprised that when I asked other people for this kind of script and googled for it I couldn’t find one. Maybe were at a point where people expect computing power alone to solve our issues. Either way as a company I dealt with a while back put it “Engineering, the unfair advantage!” 🙂

Pingback: Divide and Conquer | SQLDoubleG